Machine Learning [2]

Classification: Logistic Regression

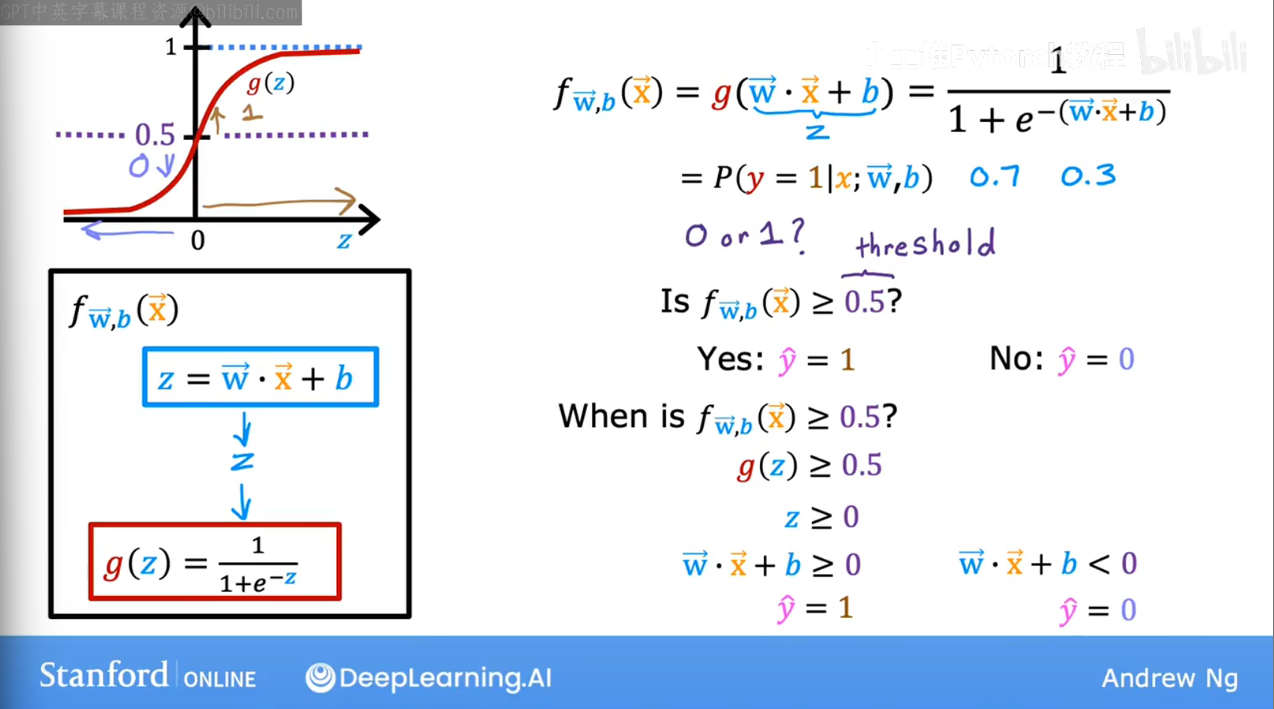

Let’s look at the logistic function and see how we use it to solve a classification problem:

For a classification problem, we have

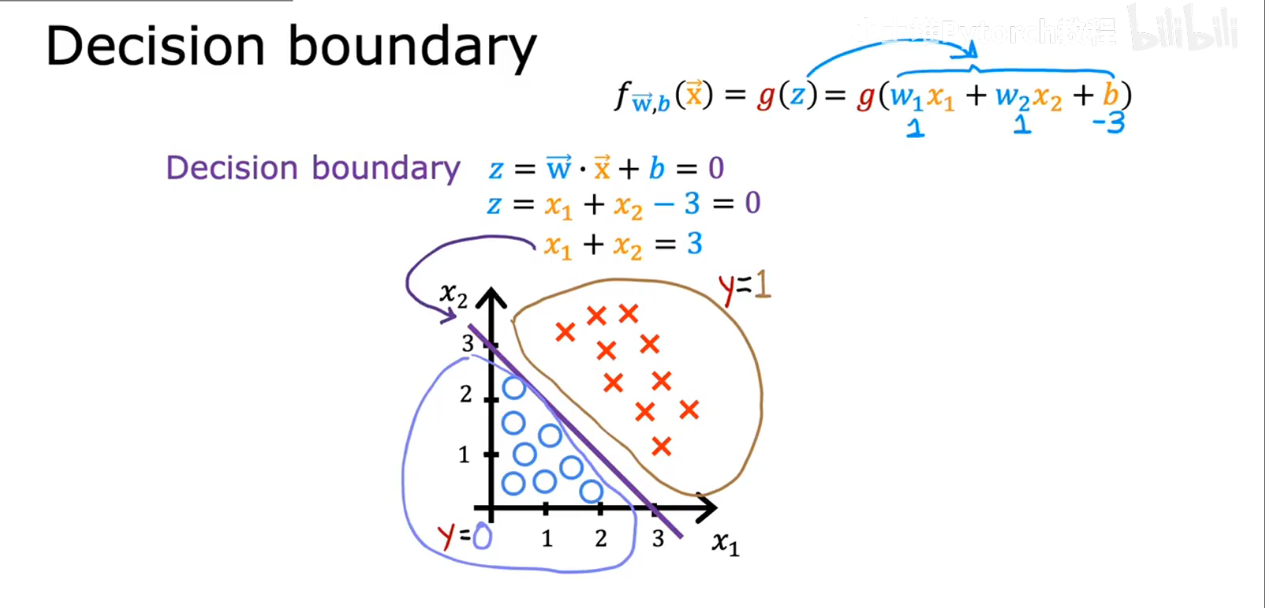
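
The logistic (sigmoid) function $g(z) = \frac{1}{1 + e^{-z}}$ maps any real number into $(0, 1)$, and logistic regression uses the hypothesis $h_\theta(x) = g(\theta^T x)$, read as the estimated probability that $y = 1$. A minimal plain-Python sketch; the names `sigmoid` and `hypothesis` are illustrative, not taken from the original notes, and each input vector is assumed to already include $x_0 = 1$:

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function g(z) = 1 / (1 + e^(-z)), mapping any real z into (0, 1)."""
    # Branch on the sign of z so math.exp never receives a large positive argument
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def hypothesis(theta: list[float], x: list[float]) -> float:
    """h_theta(x) = g(theta^T x); x is assumed to include the bias feature x_0 = 1."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)


print(sigmoid(0.0))  # 0.5
print(hypothesis([-3.0, 1.0], [1.0, 5.0]))  # g(-3 + 5) = g(2), above 0.5
```

The sign-based branch gives the same values as the textbook formula but avoids an overflow error in `math.exp` for very negative `z`.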

Decision Boundary

let

By replacing the linear

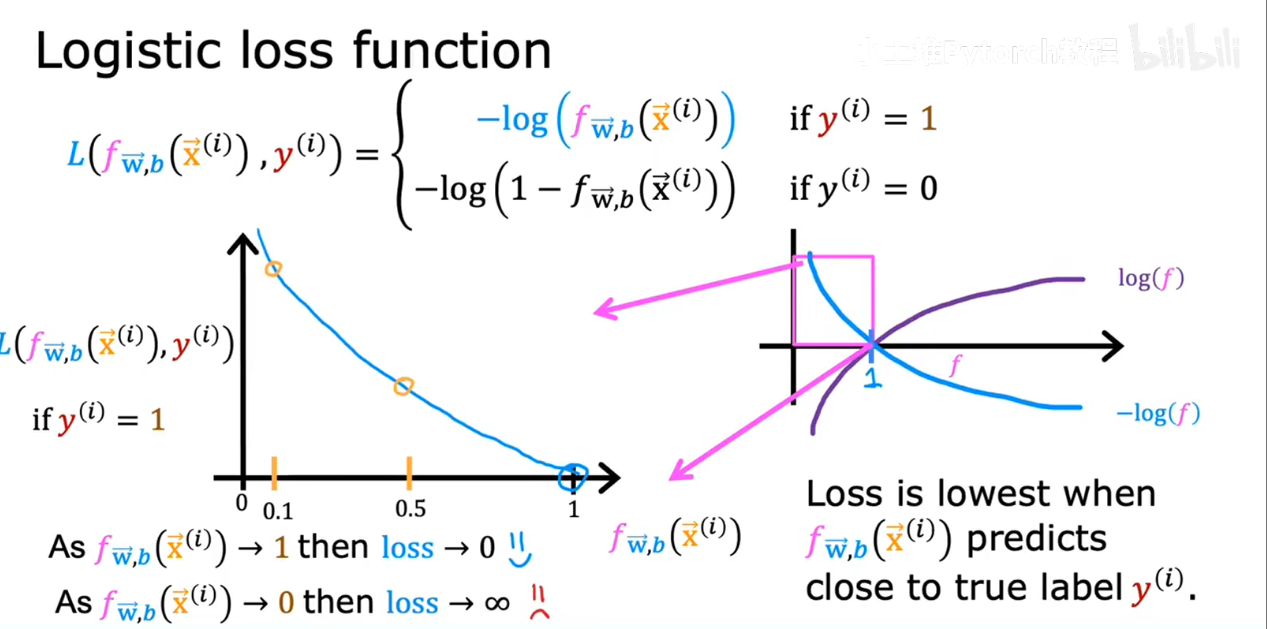

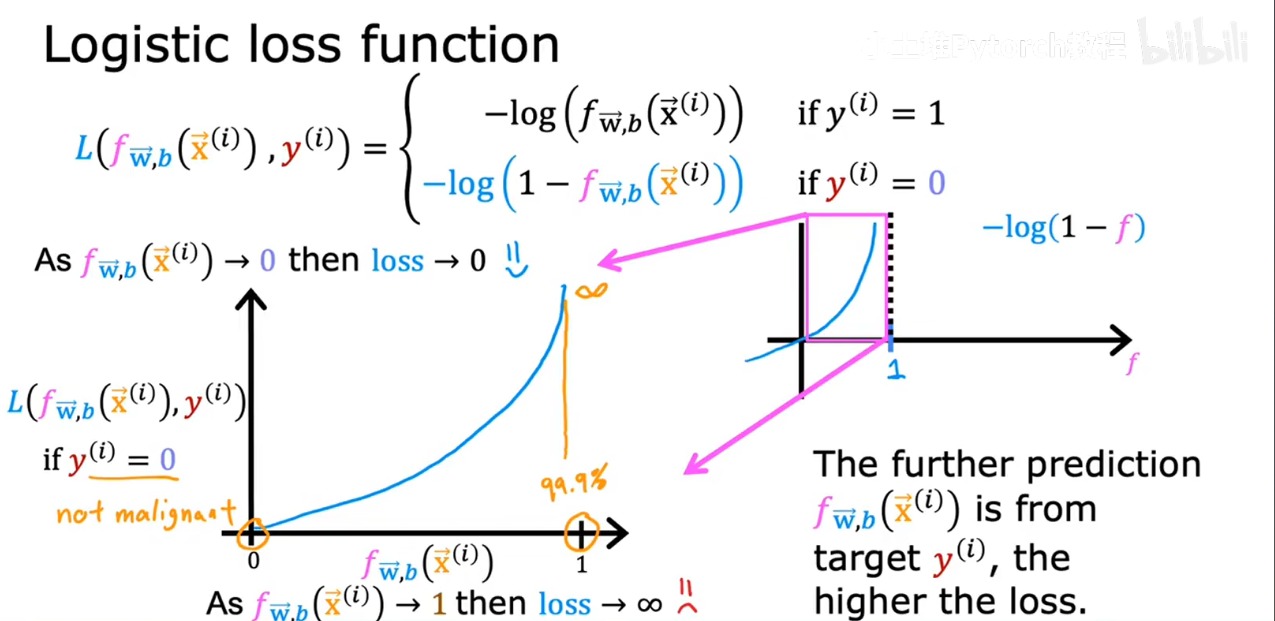
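
Because $g$ is increasing and $g(0) = 0.5$, predicting $y = 1$ whenever $h_\theta(x) \ge 0.5$ is the same as predicting $y = 1$ whenever $\theta^T x \ge 0$; the set $\theta^T x = 0$ is the decision boundary. With polynomial features the boundary can be non-linear. The sketch below assumes a hypothetical feature map $[1, x_1, x_2, x_1^2, x_2^2]$ and hand-picked (not learned) parameters $\theta = [-1, 0, 0, 1, 1]$, which make the unit circle $x_1^2 + x_2^2 = 1$ the boundary:

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, branching on the sign of z to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def poly_features(x1: float, x2: float) -> list[float]:
    """Hypothetical feature map [1, x1, x2, x1^2, x2^2] (illustrative choice)."""
    return [1.0, x1, x2, x1 * x1, x2 * x2]


def predict(theta: list[float], features: list[float]) -> int:
    """Predict y = 1 when h_theta(x) >= 0.5, i.e. when theta^T x >= 0."""
    z = sum(t * f for t, f in zip(theta, features))
    return 1 if sigmoid(z) >= 0.5 else 0


# Hand-picked parameters: theta^T x = -1 + x1^2 + x2^2,
# so the decision boundary is the unit circle x1^2 + x2^2 = 1.
theta = [-1.0, 0.0, 0.0, 1.0, 1.0]

print(predict(theta, poly_features(0.0, 0.0)))  # inside the circle: 0
print(predict(theta, poly_features(2.0, 0.0)))  # outside the circle: 1
```

In practice $\theta$ comes from training; the boundary is a property of the learned parameters, not of the training set itself.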

Cost Function for Logistic Regression
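
The standard logistic regression cost (cross-entropy, or log loss) over $m$ training examples is

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\log h_\theta(x^{(i)}) + \left(1 - y^{(i)}\right)\log\left(1 - h_\theta(x^{(i)})\right)\right]$$

Squared error is avoided here because composing it with the sigmoid makes $J$ non-convex in $\theta$, whereas this cost is convex. A minimal sketch, assuming each row of `X` includes $x_0 = 1$ and labels are in $\{0, 1\}$; the clipping constant `eps` is an illustrative numerical safeguard, not part of the formula:

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, branching on the sign of z to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def cost(theta: list[float], X: list[list[float]], y: list[int]) -> float:
    """J(theta) = -(1/m) * sum[y log h + (1 - y) log(1 - h)]."""
    m = len(y)
    eps = 1e-12  # keeps log() finite if h saturates at exactly 0 or 1
    total = 0.0
    for xi, yi in zip(X, y):
        h = sigmoid(sum(t * f for t, f in zip(theta, xi)))
        h = min(max(h, eps), 1.0 - eps)
        total += yi * math.log(h) + (1 - yi) * math.log(1.0 - h)
    return -total / m


# Toy data (made up for illustration): one feature plus the bias term
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0]]
y = [0, 0, 1]
print(cost([0.0, 0.0], X, y))   # every h = 0.5, so J = log 2
print(cost([-4.0, 2.0], X, y))  # a better-separating theta gives a lower cost
```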

Training Logistic Regression by Gradient Descent

To implement logistic regression by gradient descent, we follow the same route as we did for linear regression.
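
Differentiating the cost gives an update that looks the same as for linear regression, applied to every $j$ simultaneously:

$$\theta_j := \theta_j - \alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$

The difference is that $h_\theta(x)$ is now $g(\theta^T x)$ instead of $\theta^T x$. A minimal batch gradient descent sketch; the learning rate, iteration count, and toy data are illustrative assumptions, not tuned values:

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, branching on the sign of z to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def gradient_descent(X, y, alpha=0.1, iters=5000):
    """Batch gradient descent on the logistic regression cost, starting from theta = 0."""
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iters):
        # Prediction errors h_theta(x_i) - y_i under the current theta
        errors = [sigmoid(sum(t * f for t, f in zip(theta, xi))) - yi
                  for xi, yi in zip(X, y)]
        # Build the full gradient first so every theta_j is updated from the same old theta
        grad = [sum(e * xi[j] for e, xi in zip(errors, X)) / m for j in range(n)]
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta


def predict(theta, x):
    """Return 1 when h_theta(x) >= 0.5, else 0."""
    return 1 if sigmoid(sum(t * f for t, f in zip(theta, x))) >= 0.5 else 0


# Toy 1-D data with x_0 = 1 prepended: small x -> class 0, large x -> class 1
X = [[1.0, 0.5], [1.0, 1.0], [1.0, 1.5], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 0, 1, 1, 1]
theta = gradient_descent(X, y)
preds = [predict(theta, xi) for xi in X]
print(preds)  # [0, 0, 0, 1, 1, 1]
```

Because this toy data is linearly separable, the cost has no finite minimizer and $\theta$ keeps growing slowly with more iterations; the predictions, not the exact $\theta$ values, are the meaningful output here.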

Overfitting & Underfitting Problem

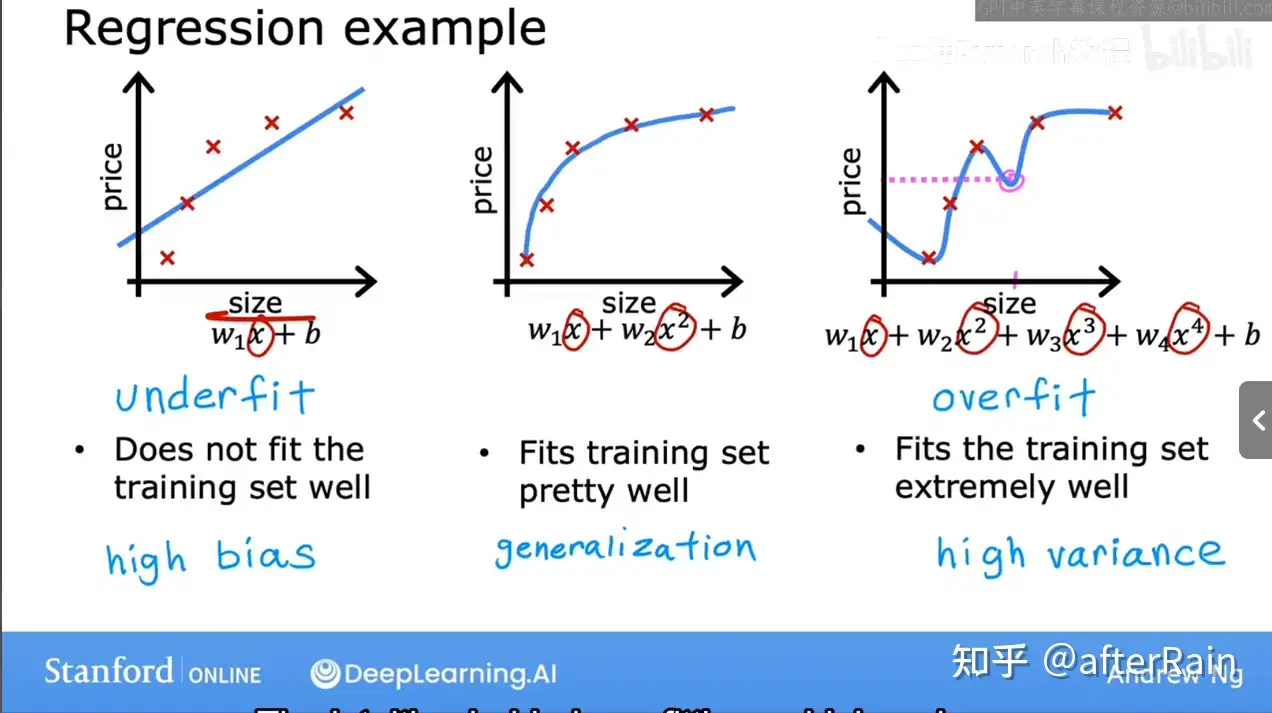

Example 1: Underfitting (high bias) and overfitting (high variance) in linear regression

Let’s take a look at the Housing Price Example.

Suppose we hold a very strong bias that housing prices are a purely linear function of size, despite data to the contrary. In this case, we underfit the problem (high bias).

At the other extreme, we can be too strict about fitting the training set. With higher-order polynomials, we can fit every example in the training set exactly, yet the model fails to generalize to new inputs. In this case, we overfit the problem (high variance).
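
To see overfitting concretely, the sketch below compares a least-squares straight line with a degree-5 polynomial that passes through all six training points. The polynomial is built by Lagrange interpolation for simplicity, rather than by gradient descent on polynomial features, and the (size, price) pairs are made up for illustration, following a roughly linear trend:

```python
def fit_line(xs, ys):
    """Least-squares line y = a + b*x in closed form."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def fit_interpolating_poly(xs, ys):
    """Degree-(m-1) polynomial through all m points, evaluated in Lagrange form."""
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return p


def train_mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical sizes (x) and prices (y), made up for illustration
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.3, 3.8, 6.4, 7.7, 10.3, 11.9]

line = fit_line(xs, ys)
poly = fit_interpolating_poly(xs, ys)
print(train_mse(line, xs, ys), train_mse(poly, xs, ys))  # the polynomial's training MSE is 0.0
print(line(7.0), poly(7.0))  # predictions for an unseen input
```

The polynomial has zero training error, yet at $x = 7$ it predicts about $-4.6$, while the line predicts about $13.95$, in keeping with the trend in the data.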

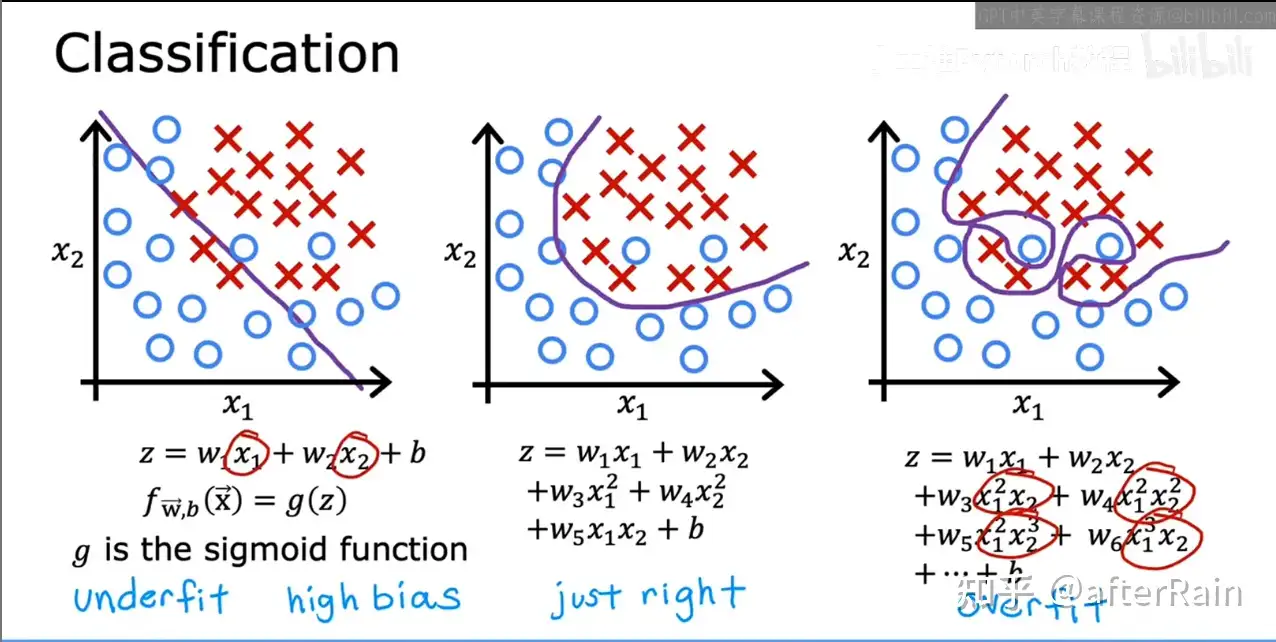

Example 2: Classification

Consider the example of classifying whether a tumor is malignant or benign.

By changing the

Addressing Overfitting

Collect more training examples.

Select which features to include or exclude.

Regularization.

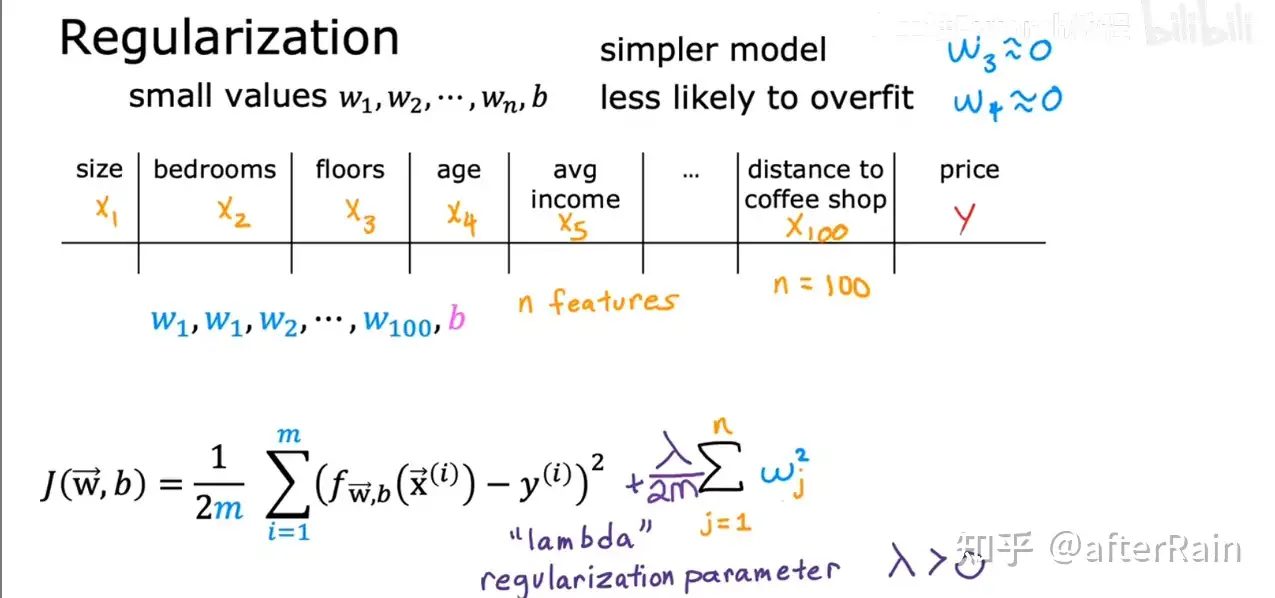

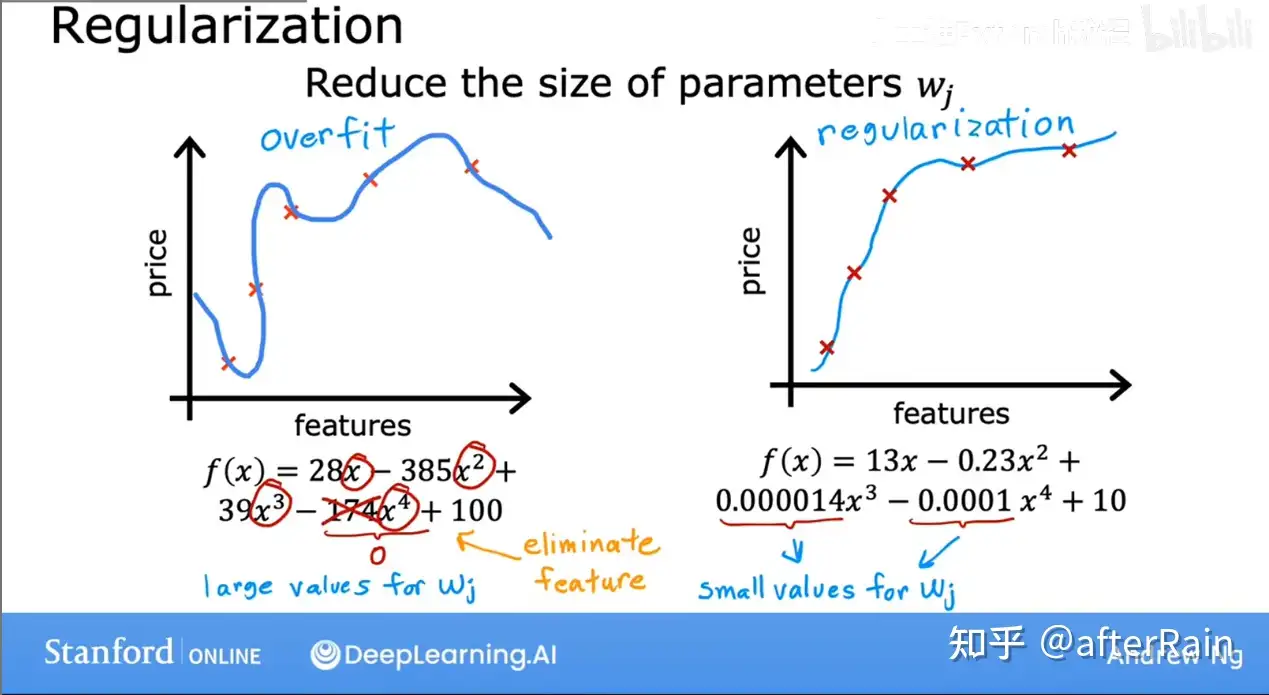

Regularization

A useful idea is to add a penalty term to our cost function.

Say we are using

with the fitting model

If we don’t know which parameters will turn out to be important, we penalize all of them a little and shrink them all by adding a new term proportional to $\sum_{j=1}^{n}\theta_j^2$ to the cost (by convention, $\theta_0$ is not penalized).
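
For linear regression, a common form of the regularized cost is

$$J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda\sum_{j=1}^{n}\theta_j^2\right]$$

which gives, for $j \ge 1$, the update $\theta_j := \theta_j\left(1 - \alpha\frac{\lambda}{m}\right) - \alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$, while $\theta_0$ keeps the unregularized update. A minimal sketch, assuming features $[1, x, x^2]$, hypothetical toy data, and illustrative values of $\lambda$, $\alpha$, and the iteration count:

```python
def regularized_gd(X, y, lam, alpha=0.5, iters=2000):
    """Batch gradient descent for regularized linear regression.

    Minimizes J = (1/2m) * [sum_i (h(x_i) - y_i)^2 + lam * sum_{j>=1} theta_j^2],
    where h(x) = theta^T x and each row of X starts with x_0 = 1.
    """
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    for _ in range(iters):
        errors = [sum(t * f for t, f in zip(theta, xi)) - yi for xi, yi in zip(X, y)]
        grad = []
        for j in range(n):
            g = sum(e * xi[j] for e, xi in zip(errors, X)) / m
            if j > 0:  # by convention theta_0 is not penalized
                g += lam * theta[j] / m
            grad.append(g)
        # Simultaneous update; for j >= 1 this equals
        # theta_j * (1 - alpha * lam / m) - alpha * (data gradient)_j
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta


def penalty(theta):
    """Sum of squared parameters, excluding theta_0."""
    return sum(t * t for t in theta[1:])


# Hypothetical toy data; features are [1, x, x^2]
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [0.9, 0.2, 0.1, 0.4, 1.1]
X = [[1.0, x, x * x] for x in xs]

theta_plain = regularized_gd(X, ys, lam=0.0)
theta_reg = regularized_gd(X, ys, lam=5.0)
print(theta_plain, penalty(theta_plain))
print(theta_reg, penalty(theta_reg))
```

With $\lambda = 5$ the penalized parameters shrink sharply (the $x^2$ coefficient drops from about $0.91$ to about $0.14$). A larger $\lambda$ shrinks them further, and if $\lambda$ is too large the model underfits.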